Table of Contents

- 14.1. The

MyISAMStorage Engine - 14.2. The

InnoDBStorage Engine - 14.2.1.

InnoDBOverview - 14.2.2.

InnoDBContact Information - 14.2.3.

InnoDBConfiguration - 14.2.4.

InnoDBStartup Options and System Variables - 14.2.5. Creating the

InnoDBTablespace - 14.2.6. Creating and Using

InnoDBTables - 14.2.7. Adding and Removing

InnoDBData and Log Files - 14.2.8. Backing Up and Recovering an

InnoDBDatabase - 14.2.9. Moving an

InnoDBDatabase to Another Machine - 14.2.10.

InnoDBTransaction Model and Locking - 14.2.11.

InnoDBPerformance Tuning Tips - 14.2.12. Implementation of Multi-Versioning

- 14.2.13.

InnoDBTable and Index Structures - 14.2.14.

InnoDBFile Space Management and Disk I/O - 14.2.15.

InnoDBError Handling - 14.2.16. Restrictions on

InnoDBTables - 14.2.17.

InnoDBTroubleshooting

- 14.2.1.

- 14.3. The

MERGEStorage Engine - 14.4. The

MEMORY(HEAP) Storage Engine - 14.5. The

BDB(BerkeleyDB) Storage Engine - 14.6. The

EXAMPLEStorage Engine - 14.7. The

FEDERATEDStorage Engine - 14.8. The

ARCHIVEStorage Engine - 14.9. The

CSVStorage Engine - 14.10. The

BLACKHOLEStorage Engine

MySQL supports several storage engines that act as handlers for different table types. MySQL storage engines include both those that handle transaction-safe tables and those that handle non-transaction-safe tables:

MyISAMmanages non-transactional tables. It provides high-speed storage and retrieval, as well as fulltext searching capabilities.MyISAMis supported in all MySQL configurations, and is the default storage engine unless you have configured MySQL to use a different one by default.The

MEMORYstorage engine provides in-memory tables. TheMERGEstorage engine allows a collection of identicalMyISAMtables to be handled as a single table. LikeMyISAM, theMEMORYandMERGEstorage engines handle non-transactional tables, and both are also included in MySQL by default.Note: The

MEMORYstorage engine formerly was known as theHEAPengine.The

InnoDBandBDBstorage engines provide transaction-safe tables.BDBis included in MySQL-Max binary distributions on those operating systems that support it.InnoDBis also included by default in all MySQL 5.0 binary distributions. In source distributions, you can enable or disable either engine by configuring MySQL as you like.The

EXAMPLEstorage engine is a “stub” engine that does nothing. You can create tables with this engine, but no data can be stored in them or retrieved from them. The purpose of this engine is to serve as an example in the MySQL source code that illustrates how to begin writing new storage engines. As such, it is primarily of interest to developers.NDB Clusteris the storage engine used by MySQL Cluster to implement tables that are partitioned over many computers. It is available in MySQL-Max 5.0 binary distributions. This storage engine is currently supported on Linux, Solaris, and Mac OS X only. We intend to add support for this engine on other platforms, including Windows, in future MySQL releases.The

ARCHIVEstorage engine is used for storing large amounts of data without indexes with a very small footprint.The

CSVstorage engine stores data in text files using comma-separated values format.The

BLACKHOLEstorage engine accepts but does not store data and retrievals always return an empty set.The

FEDERATEDstorage engine was added in MySQL 5.0.3. This engine stores data in a remote database. Currently, it works with MySQL only, using the MySQL C Client API. In future releases, we intend to enable it to connect to other data sources using other drivers or client connection methods.

This chapter describes each of the MySQL storage engines except for

NDB Cluster, which is covered in

Chapter 15, MySQL Cluster.

When you create a new table, you can specify which storage engine to

use by adding an ENGINE or

TYPE table option to the CREATE

TABLE statement:

CREATE TABLE t (i INT) ENGINE = INNODB; CREATE TABLE t (i INT) TYPE = MEMORY;

The older term TYPE is supported as a synonym for

ENGINE for backward compatibility, but

ENGINE is the preferred term and

TYPE is deprecated.

If you omit the ENGINE or TYPE

option, the default storage engine is used. Normally, this is

MyISAM, but you can change it by using the

--default-storage-engine or

--default-table-type server startup option, or by

setting the storage_engine or

table_type system variable.

When MySQL is installed on Windows using the MySQL Configuration

Wizard, the InnoDB storage engine can be selected

as the default instead of MyISAM. See

Section 2.3.4.6, “The Database Usage Dialog”.

To convert a table from one storage engine to another, use an

ALTER TABLE statement that indicates the new

engine:

ALTER TABLE t ENGINE = MYISAM; ALTER TABLE t TYPE = BDB;

See Section 13.1.5, “CREATE TABLE Syntax”, and

Section 13.1.2, “ALTER TABLE Syntax”.

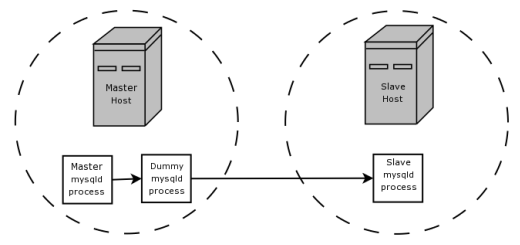

If you try to use a storage engine that is not compiled in or that

is compiled in but deactivated, MySQL instead creates a table using

the default storage engine, usually MyISAM. This

behavior is convenient when you want to copy tables between MySQL

servers that support different storage engines. (For example, in a

replication setup, perhaps your master server supports transactional

storage engines for increased safety, but the slave servers use only

non-transactional storage engines for greater speed.)

This automatic substitution of the default storage engine for unavailable engines can be confusing for new MySQL users. A warning is generated whenever a storage engine is automatically changed.

For new tables, MySQL always creates an .frm

file to hold the table and column definitions. The table's index and

data may be stored in one or more other files, depending on the

storage engine. The server creates the .frm

file above the storage engine level. Individual storage engines

create any additional files required for the tables that they

manage.

A database may contain tables of different types. That is, tables need not all be created with the same storage engine.

Transaction-safe tables (TSTs) have several advantages over non-transaction-safe tables (NTSTs):

They are safer. Even if MySQL crashes or you get hardware problems, you can get your data back, either by automatic recovery or from a backup plus the transaction log.

You can combine many statements and accept them all at the same time with the

COMMITstatement (if autocommit is disabled).You can execute

ROLLBACKto ignore your changes (if autocommit is disabled).If an update fails, all of your changes are reverted. (With non-transaction-safe tables, all changes that have taken place are permanent.)

Transaction-safe storage engines can provide better concurrency for tables that get many updates concurrently with reads.

You can combine transaction-safe and non-transaction-safe tables in

the same statements to get the best of both worlds. However,

although MySQL supports several transaction-safe storage engines,

for best results, you should not mix different storage engines

within a transaction with autocommit disabled. For example, if you

do this, changes to non-transaction-safe tables still are committed

immediately and cannot be rolled back. For information about this

and other problems that can occur in transactions that use mixed

storage engines, see Section 13.4.1, “START TRANSACTION, COMMIT, and ROLLBACK Syntax”.

Non-transaction-safe tables have several advantages of their own, all of which occur because there is no transaction overhead:

Much faster

Lower disk space requirements

Less memory required to perform updates

MyISAM is the default storage engine. It is

based on the older ISAM code but has many

useful extensions. (Note that MySQL 5.0 does

not support ISAM.)

Each MyISAM table is stored on disk in three

files. The files have names that begin with the table name and

have an extension to indicate the file type. An

.frm file stores the table format. The data

file has an .MYD (MYData)

extension. The index file has an .MYI

(MYIndex) extension.

To specify explicitly that you want a MyISAM

table, indicate that with an ENGINE table

option:

CREATE TABLE t (i INT) ENGINE = MYISAM;

The older term TYPE is supported as a synonym

for ENGINE for backward compatibility, but

ENGINE is the preferred term and

TYPE is deprecated.

Normally, it is unnecesary to use ENGINE to

specify the MyISAM storage engine.

MyISAM is the default engine unless the default

has been changed. To ensure that MyISAM is used

in situations where the default might have been changed, include

the ENGINE option explicitly.

You can check or repair MyISAM tables with the

mysqlcheck client or

myisamchk utility. You can also compress

MyISAM tables with

myisampack to take up much less space. See

Section 8.9, “mysqlcheck — A Table Maintenance and Repair Program”, Section 5.10.4.1, “Using myisamchk for Crash Recovery”,

and Section 8.4, “myisampack — Generate Compressed, Read-Only MyISAM Tables”.

MyISAM tables have the following

characteristics:

All data values are stored with the low byte first. This makes the data machine and operating system independent. The only requirements for binary portability are that the machine uses two's-complement signed integers and IEEE floating-point format. These requirements are widely used among mainstream machines. Binary compatibility might not be applicable to embedded systems, which sometimes have peculiar processors.

There is no significant speed penalty for storing data low byte first; the bytes in a table row normally are unaligned and it takes little more processing to read an unaligned byte in order than in reverse order. Also, the code in the server that fetches column values is not time critical compared to other code.

All numeric key values are stored with the high byte first to allow better index compression.

Large files (up to 63-bit file length) are supported on filesystems and operating systems that support large files.

Dynamic-sized rows are much less fragmented when mixing deletes with updates and inserts. This is done by automatically combining adjacent deleted blocks and by extending blocks if the next block is deleted.

The maximum number of indexes per

MyISAMtable is 64. This can be changed by recompiling. Beginning with MySQL 5.0.18, you can configure the build by invoking configure with the--with-max-indexes=option, whereNNis the maximum number of indexes to permit perMyISAMtable.Nmust be less thann or equal to 128. Before MySQL 5.0.18, you must change the source.The maximum number of columns per index is 16.

The maximum key length is 1000 bytes. This can also be changed by changing the source and recompiling. For the case of a key longer than 250 bytes, a larger key block size than the default of 1024 bytes is used.

When rows are inserted in sorted order (as when you are using an

AUTO_INCREMENTcolumn), the index tree is split so that the high node only contains one key. This improves space utilization in the index tree.Internal handling of one

AUTO_INCREMENTcolumn per table is supported.MyISAMautomatically updates this column forINSERTandUPDATEoperations. This makesAUTO_INCREMENTcolumns faster (at least 10%). Values at the top of the sequence are not reused after being deleted. (When anAUTO_INCREMENTcolumn is defined as the last column of a multiple-column index, reuse of values deleted from the top of a sequence does occur.) TheAUTO_INCREMENTvalue can be reset withALTER TABLEor myisamchk.Dynamic-sized rows are much less fragmented when mixing deletes with updates and inserts. This is done by automatically combining adjacent deleted blocks and by extending blocks if the next block is deleted.

If a table has no free blocks in the middle of the data file, you can

INSERTnew rows into it at the same time that other threads are reading from the table. (These are known as concurrent inserts.) A free block can occur as a result of deleting rows or an update of a dynamic length row with more data than its current contents. When all free blocks are used up (filled in), future inserts become concurrent again. See Section 7.3.3, “Concurrent Inserts”.You can put the data file and index file on different directories to get more speed with the

DATA DIRECTORYandINDEX DIRECTORYtable options toCREATE TABLE. See Section 13.1.5, “CREATE TABLESyntax”.BLOBandTEXTcolumns can be indexed.NULLvalues are allowed in indexed columns. This takes 0–1 bytes per key.Each character column can have a different character set. See Chapter 10, Character Set Support.

There is a flag in the

MyISAMindex file that indicates whether the table was closed correctly. If mysqld is started with the--myisam-recoveroption,MyISAMtables are automatically checked when opened, and are repaired if the table wasn't closed properly.myisamchk marks tables as checked if you run it with the

--update-stateoption. myisamchk --fast checks only those tables that don't have this mark.myisamchk --analyze stores statistics for portions of keys, as well as for entire keys.

myisampack can pack

BLOBandVARCHARcolumns.

MyISAM also supports the following features:

Support for a true

VARCHARtype; aVARCHARcolumn starts with a length stored in one or two bytes.Tables with

VARCHARcolumns may have fixed or dynamic row length.The sum of the lengths of the

VARCHARandCHARcolumns in a table may be up to 64KB.A hashed computed index can be used for

UNIQUE. This allows you to haveUNIQUEon any combination of columns in a table. (However, you cannot search on aUNIQUEcomputed index.)

Additional resources

A forum dedicated to the

MyISAMstorage engine is available at http://forums.mysql.com/list.php?21.

The following options to mysqld can be used

to change the behavior of MyISAM tables. For

additional information, see Section 5.2.1, “mysqld Command Options”.

Set the mode for automatic recovery of crashed

MyISAMtables.Don't flush key buffers between writes for any

MyISAMtable.Note: If you do this, you should not access

MyISAMtables from another program (such as from another MySQL server or with myisamchk) when the tables are in use. Doing so risks index corruption. Using--external-lockingdoes not eliminate this risk.

The following system variables affect the behavior of

MyISAM tables. For additional information,

see Section 5.2.2, “Server System Variables”.

bulk_insert_buffer_sizeThe size of the tree cache used in bulk insert optimization. Note: This is a limit per thread!

myisam_max_extra_sort_file_sizeUsed to help MySQL to decide when to use the slow but safe key cache index creation method. Note: This parameter was given in bytes before MySQL 5.0.6, when it was removed.

myisam_max_sort_file_sizeDon't use the fast sort index method to create an index if the temporary file would become larger than this. Note: This parameter is given in bytes.

myisam_sort_buffer_sizeSet the size of the buffer used when recovering tables.

Automatic recovery is activated if you start

mysqld with the

--myisam-recover option. In this case, when the

server opens a MyISAM table, it checks

whether the table is marked as crashed or whether the open count

variable for the table is not 0 and you are running the server

with external locking disabled. If either of these conditions is

true, the following happens:

The server checks the table for errors.

If the server finds an error, it tries to do a fast table repair (with sorting and without re-creating the data file).

If the repair fails because of an error in the data file (for example, a duplicate-key error), the server tries again, this time re-creating the data file.

If the repair still fails, the server tries once more with the old repair option method (write row by row without sorting). This method should be able to repair any type of error and has low disk space requirements.

If the recovery wouldn't be able to recover all rows from

previously completed statementas and you didn't specify

FORCE in the value of the

--myisam-recover option, automatic repair

aborts with an error message in the error log:

Error: Couldn't repair table: test.g00pages

If you specify FORCE, a warning like this is

written instead:

Warning: Found 344 of 354 rows when repairing ./test/g00pages

Note that if the automatic recovery value includes

BACKUP, the recovery process creates files

with names of the form

tbl_name-datetime.BAK

MyISAM tables use B-tree indexes. You can

roughly calculate the size for the index file as

(key_length+4)/0.67, summed over all keys.

This is for the worst case when all keys are inserted in sorted

order and the table doesn't have any compressed keys.

String indexes are space compressed. If the first index part is

a string, it is also prefix compressed. Space compression makes

the index file smaller than the worst-case figure if a string

column has a lot of trailing space or is a

VARCHAR column that is not always used to the

full length. Prefix compression is used on keys that start with

a string. Prefix compression helps if there are many strings

with an identical prefix.

In MyISAM tables, you can also prefix

compress numbers by specifying the

PACK_KEYS=1 table option when you create the

table. Numbers are stored with the high byte first, so this

helps when you have many integer keys that have an identical

prefix.

MyISAM supports three different storage

formats. Two of them, fixed and dynamic format, are chosen

automatically depending on the type of columns you are using.

The third, compressed format, can be created only with the

myisampack utility.

When you use CREATE TABLE or ALTER

TABLE for a table that has no BLOB

or TEXT columns, you can force the table

format to FIXED or DYNAMIC

with the ROW_FORMAT table option. This causes

CHAR and VARCHAR columns

to become CHAR for FIXED

format, or VARCHAR for

DYNAMIC format.

You can decompress tables by specifying

ROW_FORMAT=DEFAULT with ALTER

TABLE.

See Section 13.1.5, “CREATE TABLE Syntax”, for information about

ROW_FORMAT.

Static format is the default for MyISAM

tables. It is used when the table contains no variable-length

columns (VARCHAR,

VARBINARY, BLOB, or

TEXT). Each row is stored using a fixed

number of bytes.

Of the three MyISAM storage formats, static

format is the simplest and most secure (least subject to

corruption). It is also the fastest of the on-disk formats due

to the ease with which rows in the data file can be found on

disk: To look up a row based on a row number in the index,

multiply the row number by the row length to calculate the row

position. Also, when scanning a table, it is very easy to read

a constant number of rows with each disk read operation.

The security is evidenced if your computer crashes while the

MySQL server is writing to a fixed-format

MyISAM file. In this case,

myisamchk can easily determine where each

row starts and ends, so it can usually reclaim all rows except

the partially written one. Note that MyISAM

table indexes can always be reconstructed based on the data

rows.

Static-format tables have these characteristics:

CHARcolumns are space-padded to the column width. This is also true forNUMERICandDECIMALcolumns created before MySQL 5.0.3.BINARYcolumns are space-padded to the column width before MySQL 5.0.15. As of 5.0.15,BINARYcolumns are padded with0x00bytes.Very quick.

Easy to cache.

Easy to reconstruct after a crash, because rows are located in fixed positions.

Reorganization is unnecessary unless you delete a huge number of rows and want to return free disk space to the operating system. To do this, use

OPTIMIZE TABLEor myisamchk -r.Usually require more disk space than dynamic-format tables.

Dynamic storage format is used if a MyISAM

table contains any variable-length columns

(VARCHAR, VARBINARY,

BLOB, or TEXT), or if

the table was created with the

ROW_FORMAT=DYNAMIC table option.

Dynamic format is a little more complex than static format because each row has a header that indicates how long it is. A row can become fragmented (stored in non-contiguous pieces) when it is made longer as a result of an update.

You can use OPTIMIZE TABLE or

myisamchk -r to defragment a table. If you

have fixed-length columns that you access or change frequently

in a table that also contains some variable-length columns, it

might be a good idea to move the variable-length columns to

other tables just to avoid fragmentation.

Dynamic-format tables have these characteristics:

All string columns are dynamic except those with a length less than four.

Each row is preceded by a bitmap that indicates which columns contain the empty string (for string columns) or zero (for numeric columns). Note that this does not include columns that contain

NULLvalues. If a string column has a length of zero after trailing space removal, or a numeric column has a value of zero, it is marked in the bitmap and not saved to disk. Non-empty strings are saved as a length byte plus the string contents.Much less disk space usually is required than for fixed-length tables.

Each row uses only as much space as is required. However, if a row becomes larger, it is split into as many pieces as are required, resulting in row fragmentation. For example, if you update a row with information that extends the row length, the row becomes fragmented. In this case, you may have to run

OPTIMIZE TABLEor myisamchk -r from time to time to improve performance. Use myisamchk -ei to obtain table statistics.More difficult than static-format tables to reconstruct after a crash, because rows may be fragmented into many pieces and links (fragments) may be missing.

The expected row length for dynamic-sized rows is calculated using the following expression:

3 + (

number of columns+ 7) / 8 + (number of char columns) + (packed size of numeric columns) + (length of strings) + (number of NULL columns+ 7) / 8There is a penalty of 6 bytes for each link. A dynamic row is linked whenever an update causes an enlargement of the row. Each new link is at least 20 bytes, so the next enlargement probably goes in the same link. If not, another link is created. You can find the number of links using myisamchk -ed. All links may be removed with

OPTIMIZE TABLEor myisamchk -r.

Compressed storage format is a read-only format that is generated with the myisampack tool. Compressed tables can be uncompressed with myisamchk.

Compressed tables have the following characteristics:

Compressed tables take very little disk space. This minimizes disk usage, which is helpful when using slow disks (such as CD-ROMs).

Each row is compressed separately, so there is very little access overhead. The header for a row takes up one to three bytes depending on the biggest row in the table. Each column is compressed differently. There is usually a different Huffman tree for each column. Some of the compression types are:

Suffix space compression.

Prefix space compression.

Numbers with a value of zero are stored using one bit.

If values in an integer column have a small range, the column is stored using the smallest possible type. For example, a

BIGINTcolumn (eight bytes) can be stored as aTINYINTcolumn (one byte) if all its values are in the range from-128to127.If a column has only a small set of possible values, the data type is converted to

ENUM.A column may use any combination of the preceding compression types.

Can be used for fixed-length or dynamic-length rows.

The file format that MySQL uses to store data has been extensively tested, but there are always circumstances that may cause database tables to become corrupted. The following discussion describes how this can happen and how to handle it.

Even though the MyISAM table format is very

reliable (all changes to a table made by an SQL statement are

written before the statement returns), you can still get

corrupted tables if any of the following events occur:

The mysqld process is killed in the middle of a write.

An unexpected computer shutdown occurs (for example, the computer is turned off).

Hardware failures.

You are using an external program (such as myisamchk) to modify a table that is being modified by the server at the same time.

A software bug in the MySQL or

MyISAMcode.

Typical symptoms of a corrupt table are:

You get the following error while selecting data from the table:

Incorrect key file for table: '...'. Try to repair it

Queries don't find rows in the table or return incomplete results.

You can check the health of a MyISAM table

using the CHECK TABLE statement, and repair

a corrupted MyISAM table with

REPAIR TABLE. When

mysqld is not running, you can also check

or repair a table with the myisamchk

command. See Section 13.5.2.3, “CHECK TABLE Syntax”,

Section 13.5.2.6, “REPAIR TABLE Syntax”, and

Section 8.2, “myisamchk — MyISAM Table-Maintenance Utility”.

If your tables become corrupted frequently, you should try to

determine why this is happening. The most important thing to

know is whether the table became corrupted as a result of a

server crash. You can verify this easily by looking for a

recent restarted mysqld message in the

error log. If there is such a message, it is likely that table

corruption is a result of the server dying. Otherwise,

corruption may have occurred during normal operation. This is

a bug. You should try to create a reproducible test case that

demonstrates the problem. See Section A.4.2, “What to Do If MySQL Keeps Crashing”, and

Section E.1.6, “Making a Test Case If You Experience Table Corruption”.

Each MyISAM index file

(.MYI file) has a counter in the header

that can be used to check whether a table has been closed

properly. If you get the following warning from CHECK

TABLE or myisamchk, it means that

this counter has gone out of sync:

clients are using or haven't closed the table properly

This warning doesn't necessarily mean that the table is corrupted, but you should at least check the table.

The counter works as follows:

The first time a table is updated in MySQL, a counter in the header of the index files is incremented.

The counter is not changed during further updates.

When the last instance of a table is closed (because a

FLUSH TABLESoperation was performed or because there is no room in the table cache), the counter is decremented if the table has been updated at any point.When you repair the table or check the table and it is found to be okay, the counter is reset to zero.

To avoid problems with interaction with other processes that might check the table, the counter is not decremented on close if it was zero.

In other words, the counter can become incorrect only under these conditions:

A

MyISAMtable is copied without first issuingLOCK TABLESandFLUSH TABLES.MySQL has crashed between an update and the final close. (Note that the table may still be okay, because MySQL always issues writes for everything between each statement.)

A table was modified by myisamchk --recover or myisamchk --update-state at the same time that it was in use by mysqld.

Multiple mysqld servers are using the table and one server performed a

REPAIR TABLEorCHECK TABLEon the table while it was in use by another server. In this setup, it is safe to useCHECK TABLE, although you might get the warning from other servers. However,REPAIR TABLEshould be avoided because when one server replaces the data file with a new one, this is not known to the other servers.In general, it is a bad idea to share a data directory among multiple servers. See Section 5.13, “Running Multiple MySQL Servers on the Same Machine”, for additional discussion.

- 14.2.1.

InnoDBOverview - 14.2.2.

InnoDBContact Information - 14.2.3.

InnoDBConfiguration - 14.2.4.

InnoDBStartup Options and System Variables - 14.2.5. Creating the

InnoDBTablespace - 14.2.6. Creating and Using

InnoDBTables - 14.2.7. Adding and Removing

InnoDBData and Log Files - 14.2.8. Backing Up and Recovering an

InnoDBDatabase - 14.2.9. Moving an

InnoDBDatabase to Another Machine - 14.2.10.

InnoDBTransaction Model and Locking - 14.2.11.

InnoDBPerformance Tuning Tips - 14.2.12. Implementation of Multi-Versioning

- 14.2.13.

InnoDBTable and Index Structures - 14.2.14.

InnoDBFile Space Management and Disk I/O - 14.2.15.

InnoDBError Handling - 14.2.16. Restrictions on

InnoDBTables - 14.2.17.

InnoDBTroubleshooting

InnoDB provides MySQL with a transaction-safe

(ACID compliant) storage engine that has

commit, rollback, and crash recovery capabilities.

InnoDB does locking on the row level and also

provides an Oracle-style consistent non-locking read in

SELECT statements. These features increase

multi-user concurrency and performance. There is no need for lock

escalation in InnoDB because row-level locks

fit in very little space. InnoDB also supports

FOREIGN KEY constraints. You can freely mix

InnoDB tables with tables from other MySQL

storage engines, even within the same statement.

InnoDB has been designed for maximum

performance when processing large data volumes. Its CPU efficiency

is probably not matched by any other disk-based relational

database engine.

Fully integrated with MySQL Server, the InnoDB

storage engine maintains its own buffer pool for caching data and

indexes in main memory. InnoDB stores its

tables and indexes in a tablespace, which may consist of several

files (or raw disk partitions). This is different from, for

example, MyISAM tables where each table is

stored using separate files. InnoDB tables can

be of any size even on operating systems where file size is

limited to 2GB.

InnoDB is included in binary distributions by

default. The Windows Essentials installer makes

InnoDB the MySQL default storage engine on

Windows.

InnoDB is used in production at numerous large

database sites requiring high performance. The famous Internet

news site Slashdot.org runs on InnoDB. Mytrix,

Inc. stores over 1TB of data in InnoDB, and

another site handles an average load of 800 inserts/updates per

second in InnoDB.

InnoDB is published under the same GNU GPL

License Version 2 (of June 1991) as MySQL. For more information on

MySQL licensing, see

http://www.mysql.com/company/legal/licensing/.

Additional resources

A forum dedicated to the

InnoDBstorage engine is available at http://forums.mysql.com/list.php?22.

Contact information for Innobase Oy, producer of the

InnoDB engine:

Web site: http://www.innodb.com/

Email: <sales@innodb.com>

Phone: +358-9-6969 3250 (office)

+358-40-5617367 (mobile)

Innobase Oy Inc.

World Trade Center Helsinki

Aleksanterinkatu 17

P.O.Box 800

00101 Helsinki

Finland

The InnoDB storage engine is enabled by

default. If you don't want to use InnoDB

tables, you can add the skip-innodb option to

your MySQL option file.

Note: InnoDB

provides MySQL with a transaction-safe (ACID

compliant) storage engine that has commit, rollback, and crash

recovery capabilities. However, it cannot do

so if the underlying operating system or hardware does

not work as advertised. Many operating systems or disk subsystems

may delay or reorder write operations to improve performance. On

some operating systems, the very system call that should wait

until all unwritten data for a file has been flushed —

fsync() — might actually return before

the data has been flushed to stable storage. Because of this, an

operating system crash or a power outage may destroy recently

committed data, or in the worst case, even corrupt the database

because of write operations having been reordered. If data

integrity is important to you, you should perform some

“pull-the-plug” tests before using anything in

production. On Mac OS X 10.3 and up, InnoDB

uses a special fcntl() file flush method. Under

Linux, it is advisable to disable the

write-back cache.

On ATAPI hard disks, a command such hdparm -W0

/dev/hda may work to disable the write-back cache.

Beware that some drives or disk controllers

may be unable to disable the write-back cache.

Two important disk-based resources managed by the

InnoDB storage engine are its tablespace data

files and its log files.

Note: If you specify no

InnoDB configuration options, MySQL creates an

auto-extending 10MB data file named ibdata1

and two 5MB log files named ib_logfile0 and

ib_logfile1 in the MySQL data directory. To

get good performance, you should explicitly provide

InnoDB parameters as discussed in the following

examples. Naturally, you should edit the settings to suit your

hardware and requirements.

The examples shown here are representative. See

Section 14.2.4, “InnoDB Startup Options and System Variables” for additional information

about InnoDB-related configuration parameters.

To set up the InnoDB tablespace files, use the

innodb_data_file_path option in the

[mysqld] section of the

my.cnf option file. On Windows, you can use

my.ini instead. The value of

innodb_data_file_path should be a list of one

or more data file specifications. If you name more than one data

file, separate them by semicolon

(‘;’) characters:

innodb_data_file_path=datafile_spec1[;datafile_spec2]...

For example, a setting that explicitly creates a tablespace having the same characteristics as the default is as follows:

[mysqld] innodb_data_file_path=ibdata1:10M:autoextend

This setting configures a single 10MB data file named

ibdata1 that is auto-extending. No location

for the file is given, so by default, InnoDB

creates it in the MySQL data directory.

Sizes are specified using M or

G suffix letters to indicate units of MB or GB.

A tablespace containing a fixed-size 50MB data file named

ibdata1 and a 50MB auto-extending file named

ibdata2 in the data directory can be

configured like this:

[mysqld] innodb_data_file_path=ibdata1:50M;ibdata2:50M:autoextend

The full syntax for a data file specification includes the filename, its size, and several optional attributes:

file_name:file_size[:autoextend[:max:max_file_size]]

The autoextend attribute and those following

can be used only for the last data file in the

innodb_data_file_path line.

If you specify the autoextend option for the

last data file, InnoDB extends the data file if

it runs out of free space in the tablespace. The increment is 8MB

at a time by default. It can be modified by changing the

innodb_autoextend_increment system variable.

If the disk becomes full, you might want to add another data file

on another disk. Instructions for reconfiguring an existing

tablespace are given in Section 14.2.7, “Adding and Removing InnoDB Data and Log Files”.

InnoDB is not aware of the filesystem maximum

file size, so be cautious on filesystems where the maximum file

size is a small value such as 2GB. To specify a maximum size for

an auto-extending data file, use the max

attribute. The following configuration allows

ibdata1 to grow up to a limit of 500MB:

[mysqld] innodb_data_file_path=ibdata1:10M:autoextend:max:500M

InnoDB creates tablespace files in the MySQL

data directory by default. To specify a location explicitly, use

the innodb_data_home_dir option. For example,

to use two files named ibdata1 and

ibdata2 but create them in the

/ibdata directory, configure

InnoDB like this:

[mysqld] innodb_data_home_dir = /ibdata innodb_data_file_path=ibdata1:50M;ibdata2:50M:autoextend

Note: InnoDB

does not create directories, so make sure that the

/ibdata directory exists before you start the

server. This is also true of any log file directories that you

configure. Use the Unix or DOS mkdir command to

create any necessary directories.

InnoDB forms the directory path for each data

file by textually concatenating the value of

innodb_data_home_dir to the data file name,

adding a pathname separator (slash or backslash) between values if

necessary. If the innodb_data_home_dir option

is not mentioned in my.cnf at all, the

default value is the “dot” directory

./, which means the MySQL data directory.

(The MySQL server changes its current working directory to its

data directory when it begins executing.)

If you specify innodb_data_home_dir as an empty

string, you can specify absolute paths for the data files listed

in the innodb_data_file_path value. The

following example is equivalent to the preceding one:

[mysqld] innodb_data_home_dir = innodb_data_file_path=/ibdata/ibdata1:50M;/ibdata/ibdata2:50M:autoextend

A simple my.cnf

example. Suppose that you have a computer with 128MB

RAM and one hard disk. The following example shows possible

configuration parameters in my.cnf or

my.ini for InnoDB,

including the autoextend attribute. The example

suits most users, both on Unix and Windows, who do not want to

distribute InnoDB data files and log files onto

several disks. It creates an auto-extending data file

ibdata1 and two InnoDB log

files ib_logfile0 and

ib_logfile1 in the MySQL data directory.

Also, the small archived InnoDB log file

ib_arch_log_0000000000 that

InnoDB creates automatically ends up in the

data directory.

[mysqld] # You can write your other MySQL server options here # ... # Data files must be able to hold your data and indexes. # Make sure that you have enough free disk space. innodb_data_file_path = ibdata1:10M:autoextend # # Set buffer pool size to 50-80% of your computer's memory innodb_buffer_pool_size=70M innodb_additional_mem_pool_size=10M # # Set the log file size to about 25% of the buffer pool size innodb_log_file_size=20M innodb_log_buffer_size=8M # innodb_flush_log_at_trx_commit=1

Make sure that the MySQL server has the proper access rights to create files in the data directory. More generally, the server must have access rights in any directory where it needs to create data files or log files.

Note that data files must be less than 2GB in some filesystems. The combined size of the log files must be less than 4GB. The combined size of data files must be at least 10MB.

When you create an InnoDB tablespace for the

first time, it is best that you start the MySQL server from the

command prompt. InnoDB then prints the

information about the database creation to the screen, so you can

see what is happening. For example, on Windows, if

mysqld is located in C:\Program

Files\MySQL\MySQL Server 5.0\bin, you can

start it like this:

C:\> "C:\Program Files\MySQL\MySQL Server 5.0\bin\mysqld" --console

If you do not send server output to the screen, check the server's

error log to see what InnoDB prints during the

startup process.

See Section 14.2.5, “Creating the InnoDB Tablespace”, for an example of what the

information displayed by InnoDB should look

like.

You can place InnoDB options in the

[mysqld] group of any option file that your

server reads when it starts. The locations for option files are

described in Section 4.3.2, “Using Option Files”.

If you installed MySQL on Windows using the installation and

configuration wizards, the option file will be the

my.ini file located in your MySQL

installation directory. See

Section 2.3.4.14, “The Location of the my.ini File”.

If your PC uses a boot loader where the C:

drive is not the boot drive, your only option is to use the

my.ini file in your Windows directory

(typically C:\WINDOWS or

C:\WINNT). You can use the

SET command at the command prompt in a console

window to print the value of WINDIR:

C:\> SET WINDIR

windir=C:\WINDOWS

If you want to make sure that mysqld reads

options only from a specific file, you can use the

--defaults-file option as the first option on the

command line when starting the server:

mysqld --defaults-file=your_path_to_my_cnf

An advanced my.cnf

example. Suppose that you have a Linux computer with

2GB RAM and three 60GB hard disks at directory paths

/, /dr2 and

/dr3. The following example shows possible

configuration parameters in my.cnf for

InnoDB.

[mysqld] # You can write your other MySQL server options here # ... innodb_data_home_dir = # # Data files must be able to hold your data and indexes innodb_data_file_path = /ibdata/ibdata1:2000M;/dr2/ibdata/ibdata2:2000M:autoextend # # Set buffer pool size to 50-80% of your computer's memory, # but make sure on Linux x86 total memory usage is < 2GB innodb_buffer_pool_size=1G innodb_additional_mem_pool_size=20M innodb_log_group_home_dir = /dr3/iblogs # innodb_log_files_in_group = 2 # # Set the log file size to about 25% of the buffer pool size innodb_log_file_size=250M innodb_log_buffer_size=8M # innodb_flush_log_at_trx_commit=1 innodb_lock_wait_timeout=50 # # Uncomment the next lines if you want to use them #innodb_thread_concurrency=5

In some cases, database performance improves the if all data is

not placed on the same physical disk. Putting log files on a

different disk from data is very often beneficial for performance.

The example illustrates how to do this. It places the two data

files on different disks and places the log files on the third

disk. InnoDB fills the tablespace beginning

with the first data file. You can also use raw disk partitions

(raw devices) as InnoDB data files, which may

speed up I/O. See Section 14.2.3.2, “Using Raw Devices for the Shared Tablespace”.

Warning: On 32-bit GNU/Linux x86,

you must be careful not to set memory usage too high.

glibc may allow the process heap to grow over

thread stacks, which crashes your server. It is a risk if the

value of the following expression is close to or exceeds 2GB:

innodb_buffer_pool_size + key_buffer_size + max_connections*(sort_buffer_size+read_buffer_size+binlog_cache_size) + max_connections*2MB

Each thread uses a stack (often 2MB, but only 256KB in MySQL AB

binaries) and in the worst case also uses

sort_buffer_size + read_buffer_size additional

memory.

By compiling MySQL yourself, you can use up to 64GB of physical

memory in 32-bit Windows. See the description for

innodb_buffer_pool_awe_mem_mb in

Section 14.2.4, “InnoDB Startup Options and System Variables”.

How to tune other mysqld server parameters? The following values are typical and suit most users:

[mysqld]

skip-external-locking

max_connections=200

read_buffer_size=1M

sort_buffer_size=1M

#

# Set key_buffer to 5 - 50% of your RAM depending on how much

# you use MyISAM tables, but keep key_buffer_size + InnoDB

# buffer pool size < 80% of your RAM

key_buffer_size=value

You can store each InnoDB table and its

indexes in its own file. This feature is called “multiple

tablespaces” because in effect each table has its own

tablespace.

Using multiple tablespaces can be beneficial to users who want

to move specific tables to separate physical disks or who wish

to restore backups of single tables quickly without interrupting

the use of the remaining InnoDB tables.

You can enable multiple tablespaces by adding this line to the

[mysqld] section of

my.cnf:

[mysqld] innodb_file_per_table

After restarting the server, InnoDB stores

each newly created table into its own file

tbl_name.ibdMyISAM storage engine does, but

MyISAM divides the table into a data file

tbl_name.MYDtbl_name.MYIInnoDB, the data and the indexes are

stored together in the .ibd file. The

tbl_name.frm

If you remove the innodb_file_per_table line

from my.cnf and restart the server,

InnoDB creates tables inside the shared

tablespace files again.

innodb_file_per_table affects only table

creation, not access to existing tables. If you start the server

with this option, new tables are created using

.ibd files, but you can still access tables

that exist in the shared tablespace. If you remove the option

and restart the server, new tables are created in the shared

tablespace, but you can still access any tables that were

created using multiple tablespaces.

InnoDB always needs the shared tablespace

because it puts its internal data dictionary and undo logs

there. The .ibd files are not sufficient

for InnoDB to operate.

Note: You cannot freely move

.ibd files between database directories as

you can with MyISAM table files. This is

because the table definition that is stored in the

InnoDB shared tablespace includes the

database name, and because InnoDB must

preserve the consistency of transaction IDs and log sequence

numbers.

To move an .ibd file and the associated

table from one database to another, use a RENAME

TABLE statement:

RENAME TABLEdb1.tbl_nameTOdb2.tbl_name;

If you have a “clean” backup of an

.ibd file, you can restore it to the MySQL

installation from which it originated as follows:

Issue this

ALTER TABLEstatement:ALTER TABLE

tbl_nameDISCARD TABLESPACE;Caution: This statement deletes the current

.ibdfile.Put the backup

.ibdfile back in the proper database directory.Issue this

ALTER TABLEstatement:ALTER TABLE

tbl_nameIMPORT TABLESPACE;

In this context, a “clean”

.ibd file backup means:

There are no uncommitted modifications by transactions in the

.ibdfile.There are no unmerged insert buffer entries in the

.ibdfile.Purge has removed all delete-marked index records from the

.ibdfile.mysqld has flushed all modified pages of the

.ibdfile from the buffer pool to the file.

You can make a clean backup .ibd file using

the following method:

Stop all activity from the mysqld server and commit all transactions.

Wait until

SHOW ENGINE INNODB STATUSshows that there are no active transactions in the database, and the main thread status ofInnoDBisWaiting for server activity. Then you can make a copy of the.ibdfile.

Another method for making a clean copy of an

.ibd file is to use the commercial

InnoDB Hot Backup tool:

Use InnoDB Hot Backup to back up the

InnoDBinstallation.Start a second mysqld server on the backup and let it clean up the

.ibdfiles in the backup.

You can use raw disk partitions as data files in the shared tablespace. By using a raw disk, you can perform non-buffered I/O on Windows and on some Unix systems without filesystem overhead, which may improve performance.

When you create a new data file, you must put the keyword

newraw immediately after the data file size

in innodb_data_file_path. The partition must

be at least as large as the size that you specify. Note that 1MB

in InnoDB is 1024 × 1024 bytes, whereas

1MB in disk specifications usually means 1,000,000 bytes.

[mysqld] innodb_data_home_dir= innodb_data_file_path=/dev/hdd1:3Gnewraw;/dev/hdd2:2Gnewraw

The next time you start the server, InnoDB

notices the newraw keyword and initializes

the new partition. However, do not create or change any

InnoDB tables yet. Otherwise, when you next

restart the server, InnoDB reinitializes the

partition and your changes are lost. (As a safety measure

InnoDB prevents users from modifying data

when any partition with newraw is specified.)

After InnoDB has initialized the new

partition, stop the server, change newraw in

the data file specification to raw:

[mysqld] innodb_data_home_dir= innodb_data_file_path=/dev/hdd1:5Graw;/dev/hdd2:2Graw

Then restart the server and InnoDB allows

changes to be made.

On Windows, you can allocate a disk partition as a data file like this:

[mysqld] innodb_data_home_dir= innodb_data_file_path=//./D::10Gnewraw

The //./ corresponds to the Windows syntax

of \\.\ for accessing physical drives.

When you use raw disk partitions, be sure that they have permissions that allow read and write access by the account used for running the MySQL server.

This section describes the InnoDB-related

command options and system variables. System variables that are

true or false can be enabled at server startup by naming them, or

disabled by using a skip- prefix. For example,

to enable or disable InnoDB checksums, you can

use --innodb_checksums or

--skip-innodb_checksums on the command line, or

innodb_checksums or

skip-innodb_checksums in an option file. System

variables that take a numeric value can be specified as

--

on the command line or as

var_name=valuevar_name=value

InnoDB command options:

Enables the

InnoDBstorage engine, if the server was compiled withInnoDBsupport. Use--skip-innodbto disableInnoDB.Causes

InnoDBto create a file named<datadir>/innodb_status.<pid>InnoDBperiodically writes the output ofSHOW ENGINE INNODB STATUSto this file.

InnoDB system variables:

innodb_additional_mem_pool_sizeThe size in bytes of a memory pool

InnoDBuses to store data dictionary information and other internal data structures. The more tables you have in your application, the more memory you need to allocate here. IfInnoDBruns out of memory in this pool, it starts to allocate memory from the operating system and writes warning messages to the MySQL error log. The default value is 1MB.innodb_autoextend_incrementThe increment size (in MB) for extending the size of an auto-extending tablespace when it becomes full. The default value is 8.

innodb_buffer_pool_awe_mem_mbThe size of the buffer pool (in MB), if it is placed in the AWE memory. This is relevant only in 32-bit Windows. If your 32-bit Windows operating system supports more than 4GB memory, using so-called “Address Windowing Extensions,” you can allocate the

InnoDBbuffer pool into the AWE physical memory using this variable. The maximum possible value for this variable is 63000. If it is greater than 0,innodb_buffer_pool_sizeis the window in the 32-bit address space of mysqld whereInnoDBmaps that AWE memory. A good value forinnodb_buffer_pool_sizeis 500MB.To take advantage of AWE memory, you will need to recompile MySQL yourself. The current project settings needed for doing this can be found in the

innobase/os/os0proj.csource file.innodb_buffer_pool_sizeThe size in bytes of the memory buffer

InnoDBuses to cache data and indexes of its tables. The larger you set this value, the less disk I/O is needed to access data in tables. On a dedicated database server, you may set this to up to 80% of the machine physical memory size. However, do not set it too large because competition for physical memory might cause paging in the operating system.innodb_checksumsInnoDBcan use checksum validation on all pages read from the disk to ensure extra fault tolerance against broken hardware or data files. This validation is enabled by default. However, under some rare circumstances (such as when running benchmarks) this extra safety feature is unneeded and can be disabled with--skip-innodb_checksums. This variable was added in MySQL 5.0.3.innodb_commit_concurrencyThe number of threads that can commit at the same time. A value of 0 disables concurrency control. This variable was added in MySQL 5.0.12.

innodb_concurrency_ticketsThe number of threads that can enter

InnoDBconcurrently is determined by theinnodb_thread_concurrencyvariable. A thread is placed in a queue when it tries to enterInnoDBif the number of threads has already reached the concurrency limit. When a thread is allowed to enterInnoDB, it is given a number of “free tickets” equal to the value ofinnodb_concurrency_tickets, and the thread can enter and leaveInnoDBfreely until it has used up its tickets. After that point, the thread again becomes subject to the concurrency check (and possible queuing) the next time it tries to enterInnoDB. This variable was added in MySQL 5.0.3.innodb_data_file_pathThe paths to individual data files and their sizes. The full directory path to each data file is formed by concatenating

innodb_data_home_dirto each path specified here. The file sizes are specified in MB or GB (1024MB) by appendingMorGto the size value. The sum of the sizes of the files must be at least 10MB. If you do not specifyinnodb_data_file_path, the default behavior is to create a single 10MB auto-extending data file namedibdata1. The size limit of individual files is determined by your operating system. You can set the file size to more than 4GB on those operating systems that support big files. You can also use raw disk partitions as data files. See Section 14.2.3.2, “Using Raw Devices for the Shared Tablespace”.innodb_data_home_dirThe common part of the directory path for all

InnoDBdata files. If you do not set this value, the default is the MySQL data directory. You can specify the value as an empty string, in which case you can use absolute file paths ininnodb_data_file_path.innodb_doublewriteBy default,

InnoDBstores all data twice, first to the doublewrite buffer, and then to the actual data files. This variable is enabled by default. It can be turned off with--skip-innodb_doublewritefor benchmarks or cases when top performance is needed rather than concern for data integrity or possible failures. This variable was added in MySQL 5.0.3.innodb_fast_shutdownIf you set this variable to 0,

InnoDBdoes a full purge and an insert buffer merge before a shutdown. These operations can take minutes, or even hours in extreme cases. If you set this variable to 1,InnoDBskips these operations at shutdown. The default value is 1. If you set it to 2,InnoDBwill just flush its logs and then shut down cold, as if MySQL had crashed; no committed transaction will be lost, but crash recovery will be done at the next startup. The value of 2 can be used as of MySQL 5.0.5, except that it cannot be used on NetWare.innodb_file_io_threadsThe number of file I/O threads in

InnoDB. Normally, this should be left at the default value of 4, but disk I/O on Windows may benefit from a larger number. On Unix, increasing the number has no effect;InnoDBalways uses the default value.innodb_file_per_tableIf this variable is enabled,

InnoDBcreates each new table using its own.ibdfile for storing data and indexes, rather than in the shared tablespace. The default is to create tables in the shared tablespace. See Section 14.2.3.1, “Using Per-Table Tablespaces”.innodb_flush_log_at_trx_commitWhen

innodb_flush_log_at_trx_commitis set to 0, the log buffer is written out to the log file once per second and the flush to disk operation is performed on the log file, but nothing is done at a transaction commit. When this value is 1 (the default), the log buffer is written out to the log file at each transaction commit and the flush to disk operation is performed on the log file. When set to 2, the log buffer is written out to the file at each commit, but the flush to disk operation is not performed on it. However, the flushing on the log file takes place once per second also when the value is 2. Note that the once-per-second flushing is not 100% guaranteed to happen every second, due to process scheduling issues.The default value of this variable is 1, which is the value that is required for ACID compliance. You can achieve better performance by setting the value different from 1, but then you can lose at most one second worth of transactions in a crash. If you set the value to 0, then any mysqld process crash can erase the last second of transactions. If you set the value to 2, then only an operating system crash or a power outage can erase the last second of transactions. However,

InnoDB's crash recovery is not affected and thus crash recovery does work regardless of the value. Note that many operating systems and some disk hardware fool the flush-to-disk operation. They may tell mysqld that the flush has taken place, even though it has not. Then the durability of transactions is not guaranteed even with the setting 1, and in the worst case a power outage can even corrupt theInnoDBdatabase. Using a battery-backed disk cache in the SCSI disk controller or in the disk itself speeds up file flushes, and makes the operation safer. You can also try using the Unix command hdparm to disable the caching of disk writes in hardware caches, or use some other command specific to the hardware vendor.innodb_flush_methodIf set to

fdatasync(the default),InnoDBusesfsync()to flush both the data and log files. If set toO_DSYNC,InnoDBusesO_SYNCto open and flush the log files, but usesfsync()to flush the data files. IfO_DIRECTis specified (available on some GNU/Linux versions),InnoDBusesO_DIRECTto open the data files, and usesfsync()to flush both the data and log files. Note thatInnoDBusesfsync()instead offdatasync(), and it does not useO_DSYNCby default because there have been problems with it on many varieties of Unix. This variable is relevant only for Unix. On Windows, the flush method is alwaysasync_unbufferedand cannot be changed.innodb_force_recoveryThe crash recovery mode. Warning: This variable should be set greater than 0 only in an emergency situation when you want to dump your tables from a corrupt database! Possible values are from 1 to 6. The meanings of these values are described in Section 14.2.8.1, “Forcing

InnoDBRecovery”. As a safety measure,InnoDBprevents any changes to its data when this variable is greater than 0.innodb_lock_wait_timeoutThe timeout in seconds an

InnoDBtransaction may wait for a lock before being rolled back.InnoDBautomatically detects transaction deadlocks in its own lock table and rolls back the transaction.InnoDBnotices locks set using theLOCK TABLESstatement. The default is 50 seconds.Note: For the greatest possible durability and consistency in a replication setup using

InnoDBwith transactions, you should useinnodb_flush_log_at_trx_commit=1,sync_binlog=1, and, before MySQL 5.0.3,innodb_safe_binlogin your master servermy.cnffile. (innodb_safe_binlogis not needed from 5.0.3 on.)innodb_locks_unsafe_for_binlogThis variable controls next-key locking in

InnoDBsearches and index scans. By default, this variable is 0 (disabled), which means that next-key locking is enabled.Normally,

InnoDBuses an algorithm called next-key locking.InnoDBperforms row-level locking in such a way that when it searches or scans a table index, it sets shared or exclusive locks on any index records it encounters. Thus, the row-level locks are actually index record locks. The locks thatInnoDBsets on index records also affect the “gap” preceding that index record. If a user has a shared or exclusive lock on record R in an index, another user cannot insert a new index record immediately before R in the order of the index. Enabling this variable causesInnoDBnot to use next-key locking in searches or index scans. Next-key locking is still used to ensure foreign key constraints and duplicate key checking. Note that enabling this variable may cause phantom problems: Suppose that you want to read and lock all children from thechildtable with an identifier value larger than 100, with the intention of updating some column in the selected rows later:SELECT * FROM child WHERE id > 100 FOR UPDATE;

Suppose that there is an index on the

idcolumn. The query scans that index starting from the first record whereidis greater than 100. If the locks set on the index records do not lock out inserts made in the gaps, another client can insert a new row into the table. If you execute the sameSELECTwithin the same transaction, you see a new row in the result set returned by the query. This also means that if new items are added to the database,InnoDBdoes not guarantee serializability. Therefore, if this variable is enabledInnoDBguarantees at most isolation levelREAD COMMITTED. (Conflict serializability is still guaranteed.)Starting from MySQL 5.0.2, this option is even more unsafe.

InnoDBin anUPDATEor aDELETEonly locks rows that it updates or deletes. This greatly reduces the probability of deadlocks, but they can happen. Note that enabling this variable still does not allow operations such asUPDATEto overtake other similar operations (such as anotherUPDATE) even in the case when they affect different rows. Consider the following example, beginning with this table:CREATE TABLE A(A INT NOT NULL, B INT) ENGINE = InnoDB; INSERT INTO A VALUES (1,2),(2,3),(3,2),(4,3),(5,2); COMMIT;

Suppose that one client executes these statements:

SET AUTOCOMMIT = 0; UPDATE A SET B = 5 WHERE B = 3;

Then suppose that another client executes these statements following those of the first client:

SET AUTOCOMMIT = 0; UPDATE A SET B = 4 WHERE B = 2;

In this case, the second

UPDATEmust wait for a commit or rollback of the firstUPDATE. The firstUPDATEhas an exclusive lock on row (2,3), and the secondUPDATEwhile scanning rows also tries to acquire an exclusive lock for the same row, which it cannot have. This is becauseUPDATEtwo first acquires an exclusive lock on a row and then determines whether the row belongs to the result set. If not, it releases the unnecessary lock, when theinnodb_locks_unsafe_for_binlogvariable is enabled.Therefore,

InnoDBexecutesUPDATEone as follows:x-lock(1,2) unlock(1,2) x-lock(2,3) update(2,3) to (2,5) x-lock(3,2) unlock(3,2) x-lock(4,3) update(4,3) to (4,5) x-lock(5,2) unlock(5,2)

InnoDBexecutesUPDATEtwo as follows:x-lock(1,2) update(1,2) to (1,4) x-lock(2,3) - wait for query one to commit or rollback

innodb_log_arch_dirThe directory where fully written log files would be archived if we used log archiving. If used, the value of this variable should be set the same as

innodb_log_group_home_dir. However, it is not required.innodb_log_archiveWhether to log

InnoDBarchive files. This variable is present for historical reasons, but is unused. Recovery from a backup is done by MySQL using its own log files, so there is no need to archiveInnoDBlog files. The default for this variable is 0.innodb_log_buffer_sizeThe size in bytes of the buffer that

InnoDBuses to write to the log files on disk. Sensible values range from 1MB to 8MB. The default is 1MB. A large log buffer allows large transactions to run without a need to write the log to disk before the transactions commit. Thus, if you have big transactions, making the log buffer larger saves disk I/O.innodb_log_file_sizeThe size in bytes of each log file in a log group. The combined size of log files must be less than 4GB on 32-bit computers. The default is 5MB. Sensible values range from 1MB to 1/

N-th of the size of the buffer pool, whereNis the number of log files in the group. The larger the value, the less checkpoint flush activity is needed in the buffer pool, saving disk I/O. But larger log files also mean that recovery is slower in case of a crash.innodb_log_files_in_groupThe number of log files in the log group.

InnoDBwrites to the files in a circular fashion. The default (and recommended) is 2.innodb_log_group_home_dirThe directory path to the

InnoDBlog files. It must have the same value asinnodb_log_arch_dir. If you do not specify anyInnoDBlog variables, the default is to create two 5MB files namesib_logfile0andib_logfile1in the MySQL data directory.innodb_max_dirty_pages_pctThis is an integer in the range from 0 to 100. The default is 90. The main thread in

InnoDBtries to write pages from the buffer pool so that the percentage of dirty (not yet written) pages will not exceed this value.innodb_max_purge_lagThis variable controls how to delay

INSERT,UPDATEandDELETEoperations when the purge operations are lagging (see Section 14.2.12, “Implementation of Multi-Versioning”). The default value of this variable is 0, meaning that there are no delays.The

InnoDBtransaction system maintains a list of transactions that have delete-marked index records byUPDATEorDELETEoperations. Let the length of this list bepurge_lag. Whenpurge_lagexceedsinnodb_max_purge_lag, eachINSERT,UPDATEandDELETEoperation is delayed by ((purge_lag/innodb_max_purge_lag)×10)–5 milliseconds. The delay is computed in the beginning of a purge batch, every ten seconds. The operations are not delayed if purge cannot run because of an old consistent read view that could see the rows to be purged.A typical setting for a problematic workload might be 1 million, assuming that our transactions are small, only 100 bytes in size, and we can allow 100MB of unpurged rows in our tables.

innodb_mirrored_log_groupsThe number of identical copies of log groups to keep for the database. Currently, this should be set to 1.

innodb_open_filesThis variable is relevant only if you use multiple tablespaces in

InnoDB. It specifies the maximum number of.ibdfiles thatInnoDBcan keep open at one time. The minimum value is 10. The default is 300.The file descriptors used for

.ibdfiles are forInnoDBonly. They are independent of those specified by the--open-files-limitserver option, and do not affect the operation of the table cache.innodb_safe_binlogAdds consistency guarantees between the content of

InnoDBtables and the binary log. See Section 5.12.3, “The Binary Log”. This variable was removed in MySQL 5.0.3, having been made obsolete by the introduction of XA transaction support.innodb_support_xaWhen set to

ONor 1 (the default), this variable enablesInnoDBsupport for two-phase commit in XA transactions. Enablinginnodb_support_xacauses an extra disk flush for transaction preparation. If you don't care about using XA, you can disable this variable by setting it toOFFor 0 to reduce the number of disk flushes and get betterInnoDBperformance. This variable was added in MySQL 5.0.3.innodb_sync_spin_loopsThe number of times a thread waits for an

InnoDBmutex to be freed before the thread is suspended. This variable was added in MySQL 5.0.3.innodb_table_locksInnoDBhonorsLOCK TABLES; MySQL does not return fromLOCK TABLE .. WRITEuntil all other threads have released all their locks to the table. The default value is 1, which means thatLOCK TABLEScausesInnoDBto lock a table internally. In applications usingAUTOCOMMIT=1,InnoDB's internal table locks can cause deadlocks. You can setinnodb_table_locks=0in the server option file to remove that problem.innodb_thread_concurrencyInnoDBtries to keep the number of operating system threads concurrently insideInnoDBless than or equal to the limit given by this variable. Before MySQL 5.0.8, the default value is 8. If you have performance issues, andSHOW ENGINE INNODB STATUSreveals many threads waiting for semaphores, you may have thread “thrashing” and should try setting this variable lower or higher. If you have a computer with many processors and disks, you can try setting the value higher to make better use of your computer's resources. A recommended value is the sum of the number of processors and disks your system has. A value of 500 or greater disables concurrency checking. Starting with MySQL 5.0.8, the default value is 20, and concurrency checking will be disabled if the setting is greater than or equal to 20.innodb_thread_sleep_delayHow long

InnoDBthreads sleep before joining theInnoDBqueue, in microseconds. The default value is 10,000. A value of 0 disables sleep. This variable was added in MySQL 5.0.3.sync_binlogIf the value of this variable is positive, the MySQL server synchronizes its binary log to disk (

fdatasync()) after everysync_binlogwrites to this binary log. Note that there is one write to the binary log per statement if in autocommit mode, and otherwise one write per transaction. The default value is 0 which does no synchronizing to disk. A value of 1 is the safest choice, because in the event of a crash you lose at most one statement/transaction from the binary log; however, it is also the slowest choice (unless the disk has a battery-backed cache, which makes synchronization very fast).

Suppose that you have installed MySQL and have edited your option

file so that it contains the necessary InnoDB

configuration parameters. Before starting MySQL, you should verify

that the directories you have specified for

InnoDB data files and log files exist and that

the MySQL server has access rights to those directories.

InnoDB does not create directories, only files.

Check also that you have enough disk space for the data and log

files.

It is best to run the MySQL server mysqld from

the command prompt when you first start the server with

InnoDB enabled, not from the

mysqld_safe wrapper or as a Windows service.

When you run from a command prompt you see what

mysqld prints and what is happening. On Unix,

just invoke mysqld. On Windows, use the

--console option.

When you start the MySQL server after initially configuring

InnoDB in your option file,

InnoDB creates your data files and log files,

and prints something like this:

InnoDB: The first specified datafile /home/heikki/data/ibdata1 did not exist: InnoDB: a new database to be created! InnoDB: Setting file /home/heikki/data/ibdata1 size to 134217728 InnoDB: Database physically writes the file full: wait... InnoDB: datafile /home/heikki/data/ibdata2 did not exist: new to be created InnoDB: Setting file /home/heikki/data/ibdata2 size to 262144000 InnoDB: Database physically writes the file full: wait... InnoDB: Log file /home/heikki/data/logs/ib_logfile0 did not exist: new to be created InnoDB: Setting log file /home/heikki/data/logs/ib_logfile0 size to 5242880 InnoDB: Log file /home/heikki/data/logs/ib_logfile1 did not exist: new to be created InnoDB: Setting log file /home/heikki/data/logs/ib_logfile1 size to 5242880 InnoDB: Doublewrite buffer not found: creating new InnoDB: Doublewrite buffer created InnoDB: Creating foreign key constraint system tables InnoDB: Foreign key constraint system tables created InnoDB: Started mysqld: ready for connections

At this point InnoDB has initialized its

tablespace and log files. You can connect to the MySQL server with

the usual MySQL client programs like mysql.

When you shut down the MySQL server with mysqladmin

shutdown, the output is like this:

010321 18:33:34 mysqld: Normal shutdown 010321 18:33:34 mysqld: Shutdown Complete InnoDB: Starting shutdown... InnoDB: Shutdown completed

You can look at the data file and log directories and you see the

files created there. The log directory also contains a small file

named ib_arch_log_0000000000. That file

resulted from the database creation, after which

InnoDB switched off log archiving. When MySQL

is started again, the data files and log files have been created

already, so the output is much briefer:

InnoDB: Started mysqld: ready for connections

If you add the innodb_file_per_table option to

my.cnf, InnoDB stores each

table in its own .ibd file in the same MySQL

database directory where the .frm file is

created. See Section 14.2.3.1, “Using Per-Table Tablespaces”.

If InnoDB prints an operating system error

during a file operation, usually the problem has one of the

following causes:

You did not create the

InnoDBdata file directory or theInnoDBlog directory.mysqld does not have access rights to create files in those directories.

mysqld cannot read the proper

my.cnformy.inioption file, and consequently does not see the options that you specified.The disk is full or a disk quota is exceeded.

You have created a subdirectory whose name is equal to a data file that you specified, so the name cannot be used as a filename.

There is a syntax error in the

innodb_data_home_dirorinnodb_data_file_pathvalue.

If something goes wrong when InnoDB attempts

to initialize its tablespace or its log files, you should delete

all files created by InnoDB. This means all

ibdata files and all

ib_logfile files. In case you have already

created some InnoDB tables, delete the

corresponding .frm files for these tables

(and any .ibd files if you are using

multiple tablespaces) from the MySQL database directories as

well. Then you can try the InnoDB database

creation again. It is best to start the MySQL server from a

command prompt so that you see what is happening.

To create an InnoDB table, specify an

ENGINE = InnoDB option in the CREATE

TABLE statement:

CREATE TABLE customers (a INT, b CHAR (20), INDEX (a)) ENGINE=InnoDB;

The older term TYPE is supported as a synonym

for ENGINE for backward compatibility, but

ENGINE is the preferred term and

TYPE is deprecated.

The statement creates a table and an index on column

a in the InnoDB tablespace

that consists of the data files that you specified in

my.cnf. In addition, MySQL creates a file

customers.frm in the

test directory under the MySQL database

directory. Internally, InnoDB adds an entry for

the table to its own data dictionary. The entry includes the

database name. For example, if test is the

database in which the customers table is

created, the entry is for 'test/customers'.

This means you can create a table of the same name

customers in some other database, and the table

names do not collide inside InnoDB.

You can query the amount of free space in the

InnoDB tablespace by issuing a SHOW

TABLE STATUS statement for any InnoDB

table. The amount of free space in the tablespace appears in the

Comment section in the output of SHOW

TABLE STATUS. For example:

SHOW TABLE STATUS FROM test LIKE 'customers'

Note that the statistics SHOW displays for

InnoDB tables are only approximate. They are

used in SQL optimization. Table and index reserved sizes in bytes

are accurate, though.

By default, each client that connects to the MySQL server begins

with autocommit mode enabled, which automatically commits every

SQL statement as you execute it. To use multiple-statement

transactions, you can switch autocommit off with the SQL

statement SET AUTOCOMMIT = 0 and use

COMMIT and ROLLBACK to

commit or roll back your transaction. If you want to leave

autocommit on, you can enclose your transactions within

START TRANSACTION and either

COMMIT or ROLLBACK. The

following example shows two transactions. The first is

committed; the second is rolled back.

shell>mysql testmysql>CREATE TABLE CUSTOMER (A INT, B CHAR (20), INDEX (A))->ENGINE=InnoDB;Query OK, 0 rows affected (0.00 sec) mysql>START TRANSACTION;Query OK, 0 rows affected (0.00 sec) mysql>INSERT INTO CUSTOMER VALUES (10, 'Heikki');Query OK, 1 row affected (0.00 sec) mysql>COMMIT;Query OK, 0 rows affected (0.00 sec) mysql>SET AUTOCOMMIT=0;Query OK, 0 rows affected (0.00 sec) mysql>INSERT INTO CUSTOMER VALUES (15, 'John');Query OK, 1 row affected (0.00 sec) mysql>ROLLBACK;Query OK, 0 rows affected (0.00 sec) mysql>SELECT * FROM CUSTOMER;+------+--------+ | A | B | +------+--------+ | 10 | Heikki | +------+--------+ 1 row in set (0.00 sec) mysql>

In APIs such as PHP, Perl DBI, JDBC, ODBC, or the standard C

call interface of MySQL, you can send transaction control

statements such as COMMIT to the MySQL server

as strings just like any other SQL statements such as

SELECT or INSERT. Some

APIs also offer separate special transaction commit and rollback

functions or methods.

Important: Do not convert MySQL system tables in the

mysql database (such as

user or host) to the

InnoDB type. This is an unsupported

operation. The system tables must always be of the

MyISAM type.

If you want all your (non-system) tables to be created as

InnoDB tables, you can simply add the line

default-storage-engine=innodb to the

[mysqld] section of your server option file.

InnoDB does not have a special optimization

for separate index creation the way the

MyISAM storage engine does. Therefore, it

does not pay to export and import the table and create indexes

afterward. The fastest way to alter a table to

InnoDB is to do the inserts directly to an

InnoDB table. That is, use ALTER

TABLE ... ENGINE=INNODB, or create an empty

InnoDB table with identical definitions and

insert the rows with INSERT INTO ... SELECT * FROM

....

If you have UNIQUE constraints on secondary

keys, you can speed up a table import by turning off the